- Airflow Basehook Connection

- Airflow Basehook Get_connection

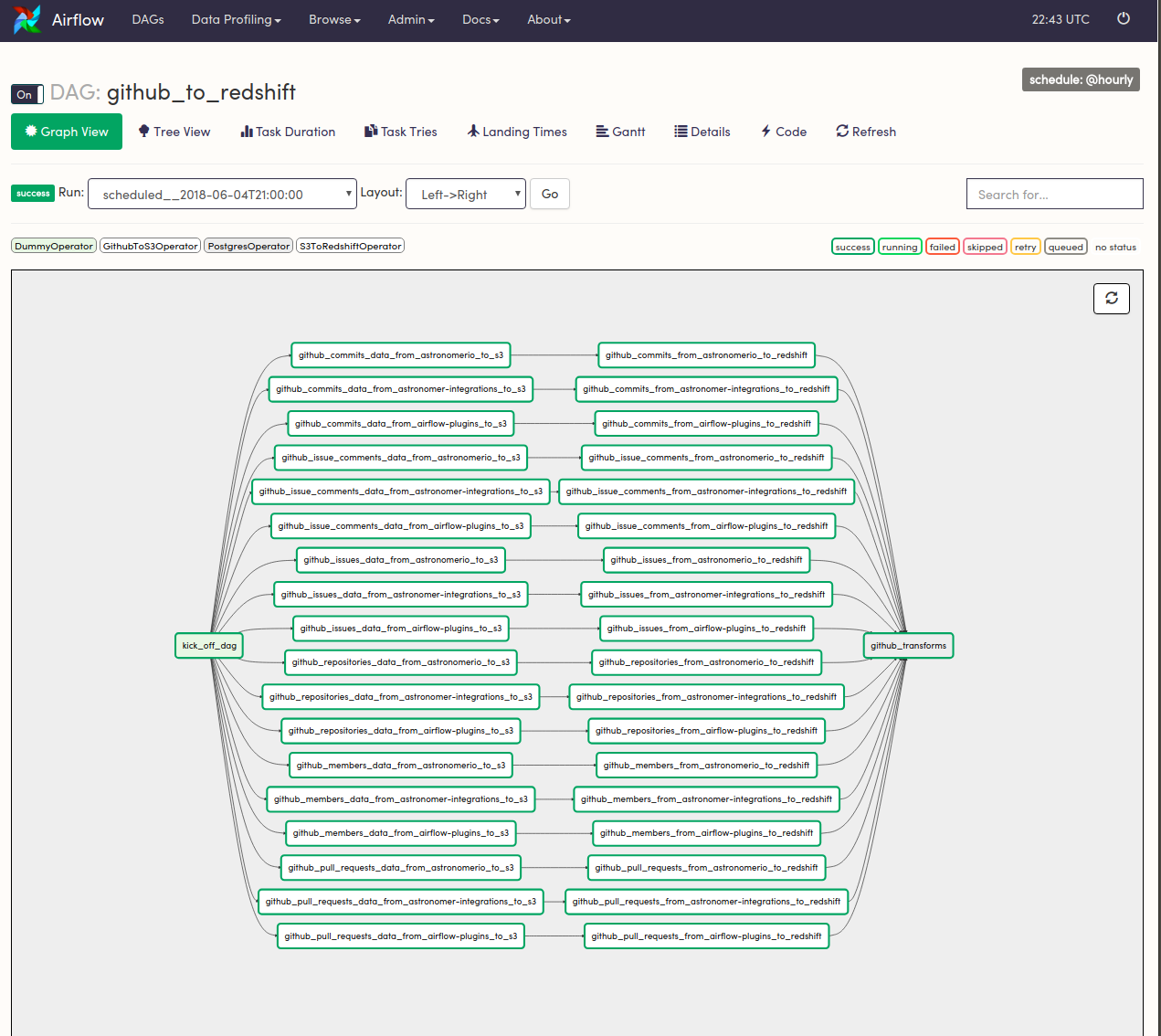

- Airflow Basehook Example

- Airflow Basehook Github

BaseHook

Bases: airflow.utils.log.loggingmixin.LoggingMixin

Abstract base class for hooks. Hooks are meant as an interface to interact with external systems.

Module Contents

- Example import block from a hook module: from past.builtins import unicode; import cloudant; from airflow.exceptions import AirflowException; from airflow.hooks.basehook import BaseHook; from airflow.utils.log.loggingmixin import LoggingMixin.

- Source code for airflow.hooks.dbapi

- class AzureBaseHook(BaseHook): This hook acts as a base hook for Azure services. It offers several authentication mechanisms to authenticate the client library used for upstream Azure hooks. :param sdkclient: The SDKClient to use.

airflow.hooks.hive_hooks.HIVE_QUEUE_PRIORITIES = ['VERY_HIGH', 'HIGH', 'NORMAL', 'LOW', 'VERY_LOW']

airflow.hooks.hive_hooks.get_context_from_env_var()

Extract context from environment variables, e.g. dag_id, task_id and execution_date, so that they can be used inside BashOperator and PythonOperator.

Returns: the context of interest.

airflow.hooks.hive_hooks.HiveCliHook(hive_cli_conn_id='hive_cli_default', run_as=None, mapred_queue=None, mapred_queue_priority=None, mapred_job_name=None)

Bases: airflow.hooks.base_hook.BaseHook

Simple wrapper around the hive CLI.

It also supports beeline, a lighter CLI that runs JDBC and is replacing the heavier traditional CLI. To enable beeline, set the use_beeline param in the extra field of your connection, as in {"use_beeline": true}.

Note that you can also set default hive CLI parameters using the hive_cli_params key in the extra field of your connection, as in {"hive_cli_params": "-hiveconf mapred.job.tracker=some.jobtracker:444"}. Parameters passed here can be overridden by run_cli's hive_conf param.

The extra connection parameter auth gets passed into the JDBC connection string as is.

mapred_queue (str) – queue used by the Hadoop Scheduler (Capacity or Fair)

mapred_queue_priority (str) – priority within the job queue. Possible settings include: VERY_HIGH, HIGH, NORMAL, LOW, VERY_LOW

mapred_job_name (str) – This name will appear in the jobtracker. This can make monitoring easier.
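The connection extras described above are stored as a JSON string. As a minimal sketch of how such a string could be read, the helper below parses the three keys mentioned in the text. `read_hive_cli_extras` is a hypothetical name used only for illustration; it is not part of the hook, and the real hook may handle defaults differently.

```python
import json

# Example extra field, using the keys named in the documentation above.
extra = (
    '{"use_beeline": true, '
    '"hive_cli_params": "-hiveconf mapred.job.tracker=some.jobtracker:444"}'
)


def read_hive_cli_extras(extra_json):
    """Parse a connection's extra JSON into the options discussed above.

    Hypothetical helper for illustration only.
    """
    extras = json.loads(extra_json) if extra_json else {}
    return {
        # Beeline is opt-in, so a missing key is treated as False here.
        "use_beeline": bool(extras.get("use_beeline", False)),
        # Split the parameter string into separate command-line tokens.
        "hive_cli_params": extras.get("hive_cli_params", "").split(),
        # Passed through unchanged; None when the key is absent (an assumption).
        "auth": extras.get("auth"),
    }


options = read_hive_cli_extras(extra)
print(options["use_beeline"], options["hive_cli_params"])
```

Note that JSON requires double-quoted keys and a lowercase `true`, which is why the extras are written that way.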

_get_proxy_user(self)

This function sets the proper proxy_user value in case the user overwrites the default.

_prepare_cli_cmd(self)

This function creates the command list from available information.

_prepare_hiveconf(d)

This function prepares a list of hiveconf params from a dictionary of key/value pairs.

d (dict) – the key/value pairs to convert
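To make the dictionary-to-parameter-list conversion concrete, here is a minimal standalone sketch. It is a re-implementation written for illustration, not the hook's own code, and `prepare_hiveconf` is a hypothetical name.

```python
def prepare_hiveconf(params):
    """Turn a dict into a flat list of ``-hiveconf key=value`` arguments."""
    if not params:
        return []
    args = []
    for key, value in params.items():
        # Each pair becomes two CLI tokens: the flag, then key=value.
        args.extend(["-hiveconf", "{}={}".format(key, value)])
    return args


print(prepare_hiveconf({"hive.exec.mode.local.auto": "false",
                        "mapred.job.name": "demo"}))
```

Because later command-line flags take precedence, appending this list after any default parameters is what lets per-call settings override connection-level ones.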

run_cli(self, hql, schema=None, verbose=True, hive_conf=None)

Run an hql statement using the hive CLI. If hive_conf is specified it should be a dict, and the entries will be set as key/value pairs in HiveConf.

hive_conf (dict) – if specified, these key/value pairs will be passed to hive as -hiveconf "key"="value". Note that they will be passed after the hive_cli_params and thus will override whatever values are specified in the database.

test_hql(self, hql)

Test an hql statement using the hive CLI and EXPLAIN.

load_df(self, df, table, field_dict=None, delimiter=',', encoding='utf8', pandas_kwargs=None, **kwargs)

Loads a pandas DataFrame into Hive.

Hive data types will be inferred if not passed, but column names will not be sanitized.

df (pandas.DataFrame) – DataFrame to load into a Hive table

table (str) – target Hive table; use dot notation to target a specific database

field_dict (collections.OrderedDict) – mapping from column name to Hive data type. Note that it must be an OrderedDict so as to keep the columns' order.

delimiter (str) – field delimiter in the file

encoding (str) – str encoding to use when writing the DataFrame to file

pandas_kwargs (dict) – passed to DataFrame.to_csv

kwargs – passed to self.load_file

load_file(self, filepath, table, delimiter=',', field_dict=None, create=True, overwrite=True, partition=None, recreate=False, tblproperties=None)

Loads a local file into Hive.

Note that the table generated in Hive uses STORED AS textfile, which isn't the most efficient serialization format. If a large amount of data is loaded and/or if the table gets queried considerably, you may want to use this operator only to stage the data into a temporary table before loading it into its final destination using a HiveOperator.

filepath (str) – local filepath of the file to load

table (str) – target Hive table; use dot notation to target a specific database

delimiter (str) – field delimiter in the file

field_dict (collections.OrderedDict) – a dictionary with the names of the fields in the file as keys and their Hive types as values. Note that it must be an OrderedDict so as to keep the columns' order.

create (bool) – whether to create the table if it doesn't exist

overwrite (bool) – whether to overwrite the data in the table or partition

partition (dict) – target partition as a dict of partition columns and values

recreate (bool) – whether to drop and recreate the table at every execution

tblproperties (dict) – TBLPROPERTIES of the Hive table being created

kill(self)
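To show how these parameters could translate into HiveQL, the sketch below assembles a CREATE TABLE statement (stored as textfile, as noted above) followed by a LOAD DATA statement. It is a simplified illustration under stated assumptions, not the hook's actual implementation: `build_load_hql` is a hypothetical name, partition columns are assumed to be STRING, and `recreate` and `tblproperties` are left out.

```python
from collections import OrderedDict


def build_load_hql(filepath, table, delimiter=",", field_dict=None,
                   create=True, overwrite=True, partition=None):
    """Build the HiveQL needed to load a local delimited file (sketch)."""
    statements = []
    if create and field_dict:
        # Column order follows the OrderedDict, matching the file layout.
        columns = ",\n".join(
            "    {} {}".format(name, hive_type)
            for name, hive_type in field_dict.items()
        )
        ddl = "CREATE TABLE IF NOT EXISTS {} (\n{})\n".format(table, columns)
        if partition:
            # Assumption: every partition column is typed as STRING.
            ddl += "PARTITIONED BY ({})\n".format(
                ", ".join("{} STRING".format(key) for key in partition)
            )
        ddl += ("ROW FORMAT DELIMITED\n"
                "FIELDS TERMINATED BY '{}'\n"
                "STORED AS textfile").format(delimiter)
        statements.append(ddl)

    load = "LOAD DATA LOCAL INPATH '{}' ".format(filepath)
    if overwrite:
        load += "OVERWRITE "
    load += "INTO TABLE {}".format(table)
    if partition:
        load += " PARTITION ({})".format(
            ", ".join("{}='{}'".format(k, v) for k, v in partition.items())
        )
    statements.append(load)
    return ";\n".join(statements) + ";"


hql = build_load_hql(
    "/tmp/data.csv",
    "staging.events",
    field_dict=OrderedDict([("id", "BIGINT"), ("name", "STRING")]),
    partition={"ds": "2020-01-01"},
)
print(hql)
```

The file path, table name and column names are made up for the example.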

airflow.hooks.hive_hooks.HiveMetastoreHook(metastore_conn_id='metastore_default')

Bases: airflow.hooks.base_hook.BaseHook

Wrapper to interact with the Hive Metastore.

MAX_PART_COUNT = 32767

__getstate__(self)

__setstate__(self, d)

get_metastore_client(self)

Returns a Hive thrift client.

get_conn(self)

check_for_partition(self, schema, table, partition)

Checks whether a partition exists.

schema (str) – Name of the Hive schema (database) the table belongs to

table – Name of the Hive table the partition belongs to

partition – Expression that matches the partitions to check for (e.g. a = 'b' AND c = 'd')

check_for_named_partition(self, schema, table, partition_name)

Checks whether a partition with a given name exists.

schema (str) – Name of the Hive schema (database) the table belongs to

table – Name of the Hive table the partition belongs to

partition_name – Name of the partition to check for (e.g. a=b/c=d)
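The partition name in the example follows a `key=value/key=value` layout. As a small illustration of that convention, the sketch below splits such a name into an ordered mapping. `parse_partition_name` is a hypothetical helper, not a method of the hook.

```python
from collections import OrderedDict


def parse_partition_name(partition_name):
    """Split a name such as 'a=b/c=d' into an ordered key -> value mapping."""
    spec = OrderedDict()
    for part in partition_name.split("/"):
        key, sep, value = part.partition("=")
        if not sep or not key:
            raise ValueError("expected key=value, got {!r}".format(part))
        spec[key] = value
    return spec


print(list(parse_partition_name("a=b/c=d").items()))
```

Order is preserved because the position of each key in the name mirrors the table's partition column order.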

get_table(self, table_name, db='default')

Get a metastore table object.

get_tables(self, db, pattern='*')

Get the metastore table objects in a database whose names match pattern.

get_databases(self, pattern='*')

Get the metastore databases whose names match pattern.

get_partitions(self, schema, table_name, filter=None)

Returns a list of all partitions in a table. Works only for tables with fewer than 32767 partitions (the Java short max value). For subpartitioned tables, the number might easily exceed this.

_get_max_partition_from_part_specs(part_specs, partition_key, filter_map)

Helper method to get the max partition of partitions with partition_key from part specs. The key:value pairs in filter_map will be used to filter out partitions.

part_specs (list) – list of partition specs.

partition_key (str) – partition key name.

filter_map (map) – partition_key:partition_value map used for partition filtering, e.g. {'key1': 'value1', 'key2': 'value2'}. Only partitions matching all partition_key:partition_value pairs will be considered as candidates for the max partition.

Returns: max partition, or None if part_specs is empty.
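The filtering rule described above can be sketched in plain Python. This is an illustrative re-implementation under assumptions, not the hook's code: each partition spec is assumed to be a dict of partition key to string value, and `max_partition_from_specs` is a hypothetical name.

```python
def max_partition_from_specs(part_specs, partition_key, filter_map=None):
    """Return the largest value of partition_key among matching specs."""
    if not part_specs:
        return None
    if partition_key not in part_specs[0]:
        raise ValueError("unknown partition key: {}".format(partition_key))
    candidates = [
        spec[partition_key]
        for spec in part_specs
        # A spec qualifies only if it matches every pair in filter_map.
        if not filter_map
        or all(spec.get(key) == value for key, value in filter_map.items())
    ]
    return max(candidates) if candidates else None


specs = [
    {"ds": "2020-01-01", "region": "eu"},
    {"ds": "2020-01-03", "region": "us"},
    {"ds": "2020-01-02", "region": "eu"},
]
print(max_partition_from_specs(specs, "ds"))
print(max_partition_from_specs(specs, "ds", {"region": "eu"}))
```

Since the values are compared as strings, the maximum is lexicographic, which works for zero-padded dates like the ones used here.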

max_partition(self, schema, table_name, field=None, filter_map=None)

Returns the maximum value for all partitions with the given field in a table. If only one partition key exists in the table, that key will be used as field. filter_map should be a partition_key:partition_value map and will be used to filter out partitions.

schema (str) – schema name.

table_name (str) – table name.

field (str) – partition key to get the max partition from.

filter_map (map) – partition_key:partition_value map used for partition filtering.

table_exists(self, table_name, db='default')

Check if a table exists.

airflow.hooks.hive_hooks.HiveServer2Hook(hiveserver2_conn_id='hiveserver2_default')

Bases: airflow.hooks.base_hook.BaseHook

Wrapper around the pyhive library.

Notes:

- The default authMechanism is PLAIN; to override it, specify it in the extra of your connection in the UI.

- The default for run_set_variable_statements is true; if you are using Impala you may need to set it to false in the extra of your connection in the UI.

get_conn(self, schema=None)

Returns a Hive connection object.

_get_results(self, hql, schema='default', fetch_size=None, hive_conf=None)

get_results(self, hql, schema='default', fetch_size=None, hive_conf=None)

Get results of the provided hql in the target schema.

hql (str or list) – hql to be executed.

schema (str) – target schema, defaults to 'default'.

fetch_size (int) – max size of result to fetch.

hive_conf (dict) – hive_conf to execute along with the hql.

Returns: results of hql execution, a dict with data (list of results) and header.

to_csv(self, hql, csv_filepath, schema='default', delimiter=',', lineterminator='\r\n', output_header=True, fetch_size=1000, hive_conf=None)

Execute hql in the target schema and write the results to a csv file.

hql (str or list) – hql to be executed.

csv_filepath (str) – filepath of the csv to write results into.

schema (str) – target schema, defaults to 'default'.

delimiter (str) – delimiter of the csv file, defaults to ','.

lineterminator (str) – lineterminator of the csv file.

output_header (bool) – whether to write a header row to the csv file, defaults to True.

fetch_size (int) – number of result rows to write into the csv file, defaults to 1000.

hive_conf (dict) – hive_conf to execute along with the hql.
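As an illustration of how the delimiter, lineterminator and output_header options shape the output, the sketch below writes already-fetched rows with Python's standard csv module. It is not the hook's implementation: `write_rows_to_csv` is a hypothetical helper, the rows are made up, and fetch_size is treated here as a batch size, which is an assumption.

```python
import csv
import io


def write_rows_to_csv(header, rows, out, delimiter=",", lineterminator="\r\n",
                      output_header=True, fetch_size=1000):
    """Write rows to a file-like object in batches; return the row count."""
    writer = csv.writer(out, delimiter=delimiter, lineterminator=lineterminator)
    if output_header:
        writer.writerow(header)
    written = 0
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) >= fetch_size:
            # Flush a full batch so memory use stays bounded.
            writer.writerows(batch)
            written += len(batch)
            batch = []
    if batch:
        # Flush whatever is left after the last full batch.
        writer.writerows(batch)
        written += len(batch)
    return written


buffer = io.StringIO(newline="")
count = write_rows_to_csv(["id", "name"], [(1, "a"), (2, "b"), (3, "c")],
                          buffer, fetch_size=2)
print(count)
print(repr(buffer.getvalue()))
```

An in-memory buffer stands in for the csv file so the example runs without touching disk.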

get_records(self, hql, schema='default')

Get a set of records from a Hive query.

hql (str or list) – hql to be executed.

schema (str) – target schema, defaults to 'default'.

hive_conf (dict) – hive_conf to execute along with the hql.

Returns: result of hive execution.

get_pandas_df(self, hql, schema='default')

Get a pandas DataFrame from a Hive query.

hql (str or list) – hql to be executed.

schema (str) – target schema, defaults to 'default'.

Returns: result of hql execution, as a DataFrame.

Return type: pandas.DataFrame


A Pylint plugin to lint Apache Airflow code.

Project description

Pylint plugin for static code analysis on Airflow code.

Usage

Installation: `pip install pylint-airflow`

Usage: `pylint --load-plugins=pylint_airflow [your_file]`

This plugin runs on Python 3.6 and higher.

Error codes

The Pylint-Airflow codes follow the structure {I,C,R,W,E,F}83{0-9}{0-9}, where:

- The characters show:

- I = Info

- C = Convention

- R = Refactor

- W = Warning

- E = Error

- F = Fatal

- 83 is the base id (see all here https://github.com/PyCQA/pylint/blob/master/pylint/checkers/__init__.py)

- {0-9}{0-9} is any number 00-99
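The code structure listed above maps directly onto a regular expression. The sketch below validates a code and splits it into its severity and two-digit number. `parse_code` is a hypothetical helper written for illustration; it is not part of the plugin.

```python
import re

# Severity letters as listed above.
SEVERITIES = {
    "I": "Info",
    "C": "Convention",
    "R": "Refactor",
    "W": "Warning",
    "E": "Error",
    "F": "Fatal",
}

# One severity letter, the base id 83, then a two-digit number 00-99.
CODE_PATTERN = re.compile(r"^([ICRWEF])83(\d{2})$")


def parse_code(code):
    """Return (severity name, number) for a code such as 'W8300'."""
    match = CODE_PATTERN.match(code)
    if match is None:
        raise ValueError("not a Pylint-Airflow code: {!r}".format(code))
    return SEVERITIES[match.group(1)], int(match.group(2))


print(parse_code("W8300"))
print(parse_code("C8306"))
```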

The current codes are:

| Code | Symbol | Description |
|---|---|---|
| C8300 | different-operator-varname-taskid | For consistency assign the same variable name and task_id to operators. |
| C8301 | match-callable-taskid | For consistency name the callable function '_[task_id]', e.g. PythonOperator(task_id='mytask', python_callable=_mytask). |
| C8302 | mixed-dependency-directions | For consistency don't mix directions in a single statement, instead split over multiple statements. |
| C8303 | task-no-dependencies | Sometimes a task without any dependency is desired, however often it is the result of a forgotten dependency. |
| C8304 | task-context-argname | Indicate you expect Airflow task context variables in the `**kwargs` argument by renaming to `**context`. |
| C8305 | task-context-separate-arg | To avoid unpacking kwargs from the Airflow task context in a function, you can set the needed variables as arguments in the function. |
| C8306 | match-dagid-filename | For consistency match the DAG filename with the dag_id. |
| R8300 | unused-xcom | Return values from a python_callable function or execute() method are automatically pushed as XCom. |
| W8300 | basehook-top-level | Airflow executes DAG scripts periodically and anything at the top level of a script is executed. Therefore, move BaseHook calls into functions/hooks/operators. |
| E8300 | duplicate-dag-name | DAG name should be unique. |
| E8301 | duplicate-task-name | Task name within a DAG should be unique. |
| E8302 | duplicate-dependency | Task dependencies can be defined only once. |
| E8303 | dag-with-cycles | A DAG is acyclic and cannot contain cycles. |
| E8304 | task-no-dag | A task must know a DAG instance to run. |

Documentation

Documentation is available on Read the Docs.

Contributing

Suggestions for more checks are always welcome, please create an issue on GitHub. Read CONTRIBUTING.rst for more details.


Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

| Filename | Size | File type | Python version |
|---|---|---|---|
| pylint_airflow-0.1.0a1-py3-none-any.whl | 9.5 kB | Wheel | py3 |
| pylint-airflow-0.1.0a1.tar.gz | 7.0 kB | Source | None |


Hashes for pylint_airflow-0.1.0a1-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 530a43e902831f2806dc3f672c7eb46ac1378106c400a04dce2ea1ccd5c4d0c3 |
| MD5 | c8fba07d31ad7d45b16f91212414c971 |
| BLAKE2-256 | 5f795fff2161bfecfc249eb8050685e2403488d83290ac4e6237781176f3968e |

Hashes for pylint-airflow-0.1.0a1.tar.gz

| Algorithm | Hash digest |
|---|---|
| SHA256 | a0e3edb13932f35b24b24e66ebe596541600575b755b78a2a33d74d8bf94b620 |
| MD5 | d8d8fa0b1ad4f553a1cfd2ab59141e51 |
| BLAKE2-256 | ff55619e9e6b5710eee8439c725ab18e10ca6da47d1c6b90c7a43770debd1915 |